[1] GAO Guanghan. Differences and quantitative evaluation of intergovernmental overall emergency plans: comparative analysis based on the texts of 29 provincial plans[J]. Journal of Beijing University of Technology (Social Sciences Edition), 2023, 23(6):113-128.

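[2] FENG Shuangjian, LI Yaoyuan. Investigation, analysis and suggestions on the construction of the emergency management discipline[J]. China Emergency Management, 2022(8):66-77.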

[3] YANG Jixing, FANG Yudong, BIAN Lu, et al. Research and application exploration of digital battlefield system for emergency rescue[J]. China Safety Science Journal, 2023, 33(10):240-246. DOI: 10.16265/j.cnki.issn1003-3033.2023.10.2199.

[4] SONG Dunjiang, YANG Lin, ZHONG Shaobo. Research on optimization of disaster triplet information extraction based on BERT[J]. China Safety Science Journal, 2022, 32(2):115-120. DOI: 10.16265/j.cnki.issn1003-3033.2022.02.016.

[5] WANG Haochang, LIU Ruyi. Review of relation extraction based on pre-training language model[J]. Computer and Modernization, 2023(1):49-57,94.

[6] WANG Lihu, LIU Xuemei, LIU Yang, et al. Emergency entity relationship extraction for water diversion project based on pre-trained model and multi-featured graph convolutional network[J]. PLoS ONE, 2023, 18(10). DOI: 10.1371/journal.pone.0292004.

[7] XU Na, LIANG Yanxiang, WANG Liang, et al. Research on knowledge management in coal mine construction safety field based on knowledge graph[J]. China Safety Science Journal, 2024, 34(5):28-35. DOI: 10.16265/j.cnki.issn1003-3033.2024.05.0835.

[8] LIU Xuemei, LU Hankang, LI Hairui. Intelligent generation method of emergency plan for hydraulic engineering based on knowledge graph: take the South-to-North Water Diversion Project as an example[J]. LHB: Hydroscience Journal, 2022, 108(1). DOI: 10.1080/27678490.2022.2153629.

[9] BELKIN M, HSU D, MA Siyuan, et al. Reconciling modern machine-learning practice and the classical bias-variance trade-off[J]. Proceedings of the National Academy of Sciences, 2019, 116(32):15849-15854.

| [10] |

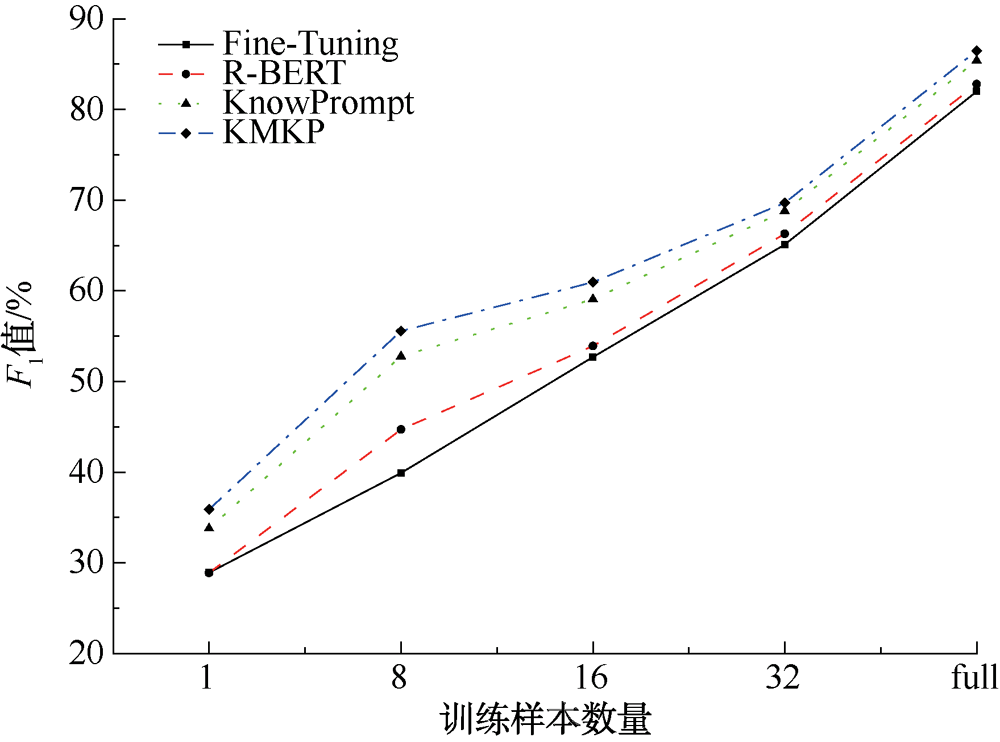

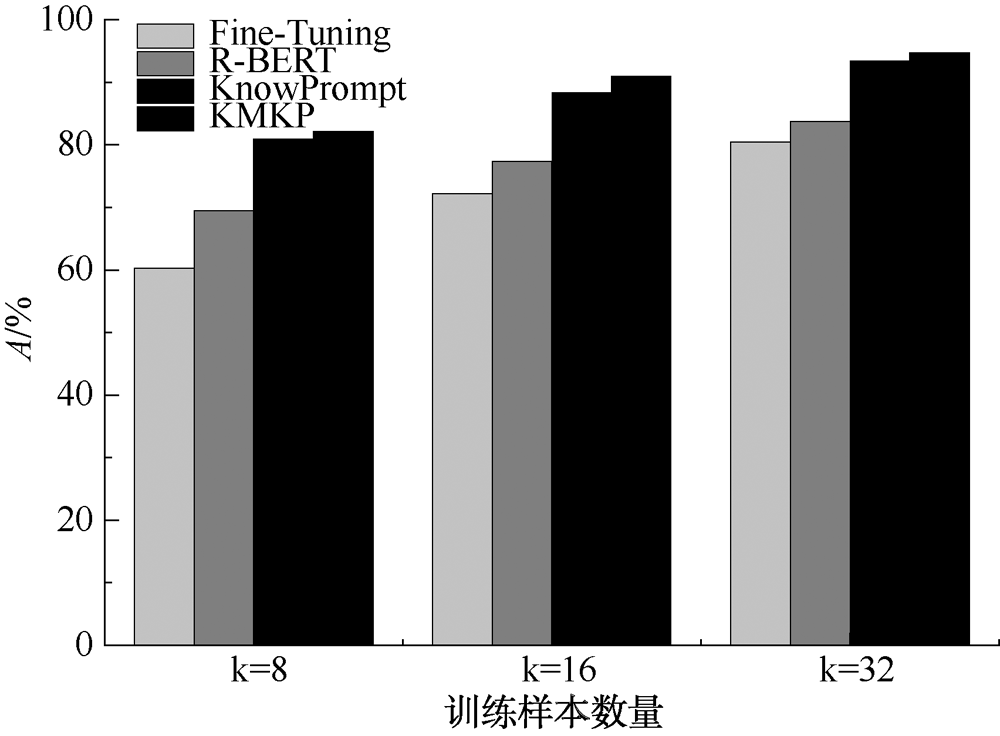

PENG Hao, GAO Tianyu, HAN Xu, et al. Learning from context or names? an empirical study on neural relation extraction[C]. the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2020:3661-3672.

|

[11] ZHOU Wenxuan, CHEN Muhao. An improved baseline for sentence-level relation extraction[C]//Proceedings of the 2nd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 12th International Joint Conference on Natural Language Processing (Volume 2: Short Papers), 2022:161-168.

[12] LIU Junbao, QIN Xizhong, MA Xiaoqin, et al. FREDA: few-shot relation extraction based on data augmentation[J]. Applied Sciences, 2023, 13(14). DOI: 10.3390/app13148312.

[13] NASAR Z, JAFFRY S W, MALIK M K. Named entity recognition and relation extraction: state-of-the-art[J]. ACM Computing Surveys (CSUR), 2021, 54(1):1-39.

[14] SHIN T, RAZEGHI Y, LOGAN R L, et al. AutoPrompt: eliciting knowledge from language models with automatically generated prompts[C]//Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2020:4222-4235.

[15]

[16] XU Benfeng, WANG Quan, MAO Zhendong, et al. kNN prompting: beyond-context learning with calibration-free nearest neighbor inference[C]//Proceedings of the 11th International Conference on Learning Representations (ICLR), 2023:1-24.

[17] HUANG Anzhong, XU Rui, CHEN Yu, et al. Research on multi-label user classification of social media based on ML-KNN algorithm[J]. Technological Forecasting and Social Change, 2023, 188. DOI: 10.1016/j.techfore.2022.122271.

[18] HENDRICKX I, KIM S N, KOZAREVA Z, et al. SemEval-2010 task 8: multi-way classification of semantic relations between pairs of nominals[C]//Proceedings of the 5th International Workshop on Semantic Evaluation, 2010:33-38.

[19] ZHANG Yuhao, ZHONG Victor, CHEN Danqi, et al. Position-aware attention and supervised data improve slot filling[C]//Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, 2017:35-45.

[20] HUANG Ziqi, HU Jianpeng. Entity category enhanced nested named entity recognition in automotive domain[J]. Journal of Computer Applications, 2024, 44(2):377-384. DOI: 10.11772/j.issn.1001-9081.2023020239.

[21] WU Shanchan, HE Yifan. Enriching pre-trained language model with entity information for relation classification[C]//Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM), 2019:2361-2364.

[22] XUE Fuzhao, SUN Aixin, ZHANG Hao, et al. GDPNet: refining latent multi-view graph for relation extraction[C]//Proceedings of the AAAI Conference on Artificial Intelligence, 2021. DOI: 10.48550/arXiv.2012.06780.

[23] HAN Xu, ZHAO Weilin, DING Ning, et al. PTR: prompt tuning with rules for text classification[J]. AI Open, 2022, 3:182-192.

[24] CHEN Xiang, ZHANG Ningyu, XIE Xin, et al. KnowPrompt: knowledge-aware prompt-tuning with synergistic optimization for relation extraction[C]//Proceedings of the ACM Web Conference 2022, 2022:2778-2788.

[25] FEDUS W, GOODFELLOW I, DAI A M. MaskGAN: better text generation via filling in the ______[C]//International Conference on Learning Representations (ICLR), 2018:1-17.

[26] WEI J. Good-enough example extrapolation[C]//Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 2021:5923-5929.

| [27] |

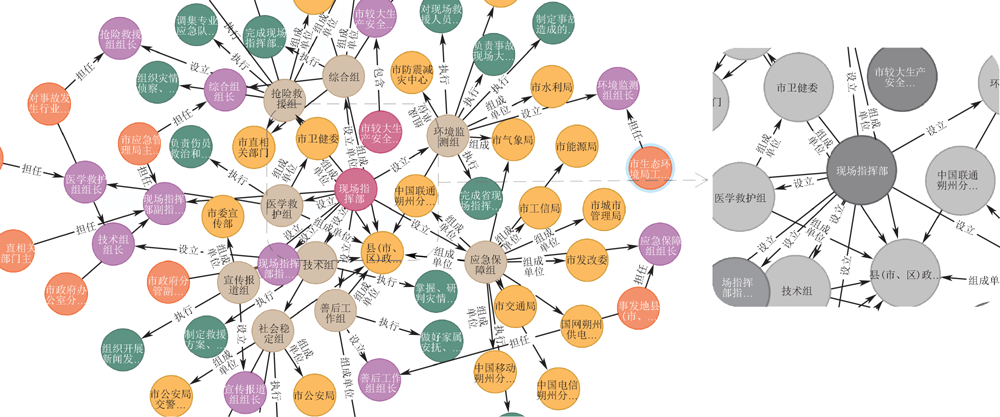

周义棋, 刘畅, 龙增, 等. 电网应急预案知识图谱构建方法与应用[J]. 中国安全生产科学技术, 2023, 19(1):5-13.

|

|

ZHOU Yiqi, LIU Chang, LONG Zeng, et al. Construction method and application of knowledge graph in emergency plans for power grid[J]. Journal of Safety Science and Technology, 2023, 19(1):5-13.

|